
Autonomous exploration is preferred for mapping because the robot can generate a map without any human effort. The classical approach is to extract frontier points, i.e. points on the boundary between known free space and unexplored space in the occupancy grid, and send them to the robot as goals so that it drives there and explores that area. Several open-source ROS packages implement this approach, such as explore_lite and frontier_exploration.
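To illustrate the classical approach, here is a minimal sketch that finds frontier cells in an occupancy grid. It assumes the ROS `nav_msgs/OccupancyGrid` value convention (-1 unknown, 0 free, 100 occupied) and a 4-connected neighbourhood. `find_frontiers` is a hypothetical helper and does not reproduce the implementation used by explore_lite or frontier_exploration.

```python
from typing import List, Tuple

# ROS nav_msgs/OccupancyGrid convention: -1 = unknown, 0 = free, 100 = occupied.
UNKNOWN, FREE, OCCUPIED = -1, 0, 100


def find_frontiers(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell that touches an unknown cell (4-connected)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue  # only known free cells can be frontiers
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


if __name__ == "__main__":
    grid = [
        [0, 0, -1],
        [0, 100, -1],
        [0, 0, 0],
    ]
    print(find_frontiers(grid))  # [(0, 1), (2, 2)]
```

In practice, neighbouring frontier cells are usually grouped into clusters, and one representative point per cluster is sent to the robot as a navigation goal.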

In our approach, we use RRT (Rapidly-exploring Random Tree) exploration. A modified RRT algorithm detects frontier points, and it has been shown to be faster than standard frontier-based exploration. In this algorithm, we run two different ROS nodes for exploration:

The algorithm runs until the loop-closure condition in the global map is satisfied. We assume that the boundary of the environment always forms a closed loop. The algorithm therefore stops once it can no longer find any open frontiers in the current map, meaning that all traversable parts of the terrain have been mapped and no more global frontiers remain. More details about this ROS package can be found on the ROS Wiki.
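The RRT-based frontier detection and its stopping condition can be illustrated with a minimal single-tree sketch. It assumes ROS occupancy values (-1/0/100) and one grid cell per unit length, and it treats space outside the grid as blocked, mirroring the closed-boundary assumption above. The function names, the step size `eta` and the iteration count are illustrative only and are not taken from the ROS package. A tree grows from the robot's position. An edge that enters unknown space yields a frontier point, an edge that hits an obstacle is discarded, and only edges through known free space extend the tree.

```python
import math
import random
from typing import List, Tuple

# ROS nav_msgs/OccupancyGrid values: -1 = unknown, 0 = free, 100 = occupied.
UNKNOWN, FREE, OCCUPIED = -1, 0, 100
Point = Tuple[float, float]


def cell_at(grid: List[List[int]], p: Point) -> int:
    """Grid value at continuous point p = (x, y); one cell per unit, x = column, y = row."""
    r, c = int(math.floor(p[1])), int(math.floor(p[0]))
    if 0 <= r < len(grid) and 0 <= c < len(grid[0]):
        return grid[r][c]
    return OCCUPIED  # assumption: space outside the grid is treated as blocked


def check_edge(grid: List[List[int]], a: Point, b: Point,
               step: float = 0.1) -> Tuple[str, Point]:
    """Sample the segment a -> b and report the first non-free cell it enters."""
    n = max(1, int(math.dist(a, b) / step))
    for i in range(1, n + 1):
        t = i / n
        p = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        value = cell_at(grid, p)
        if value == UNKNOWN:
            return "unknown", p   # the edge left known space: p is a frontier point
        if value != FREE:
            return "occupied", p  # the edge hit an obstacle: discard it
    return "free", b


def rrt_frontiers(grid: List[List[int]], start: Point, iterations: int = 1000,
                  eta: float = 1.0, seed: int = 0) -> List[Point]:
    """Grow one RRT from `start` and collect points where its edges reach unknown space."""
    rng = random.Random(seed)
    height, width = len(grid), len(grid[0])
    nodes: List[Point] = [start]
    frontiers: List[Point] = []
    for _ in range(iterations):
        x_rand = (rng.uniform(0, width), rng.uniform(0, height))
        x_near = min(nodes, key=lambda n: math.dist(n, x_rand))
        d = math.dist(x_near, x_rand)
        if d == 0.0:
            continue
        s = min(eta, d) / d  # steer at most `eta` units towards the sample
        x_new = (x_near[0] + s * (x_rand[0] - x_near[0]),
                 x_near[1] + s * (x_rand[1] - x_near[1]))
        status, p = check_edge(grid, x_near, x_new)
        if status == "unknown":
            frontiers.append(p)
        elif status == "free":
            nodes.append(x_new)  # only edges through known free space extend the tree
    return frontiers


if __name__ == "__main__":
    # Closed room: free interior surrounded by walls, nothing left unknown.
    room = [[100] * 5] + [[100, 0, 0, 0, 100] for _ in range(3)] + [[100] * 5]
    found = rrt_frontiers(room, (2.5, 2.5))
    print("exploration finished" if not found else f"{len(found)} frontier points")
```

You can mirror the termination condition by calling `rrt_frontiers` repeatedly as the map grows and stopping once it returns an empty list. Because sampling is random, an empty result after a finite number of iterations is strong evidence, not proof, that no frontiers remain. Raw frontier points are typically clustered and filtered before being assigned to the robot as goals.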

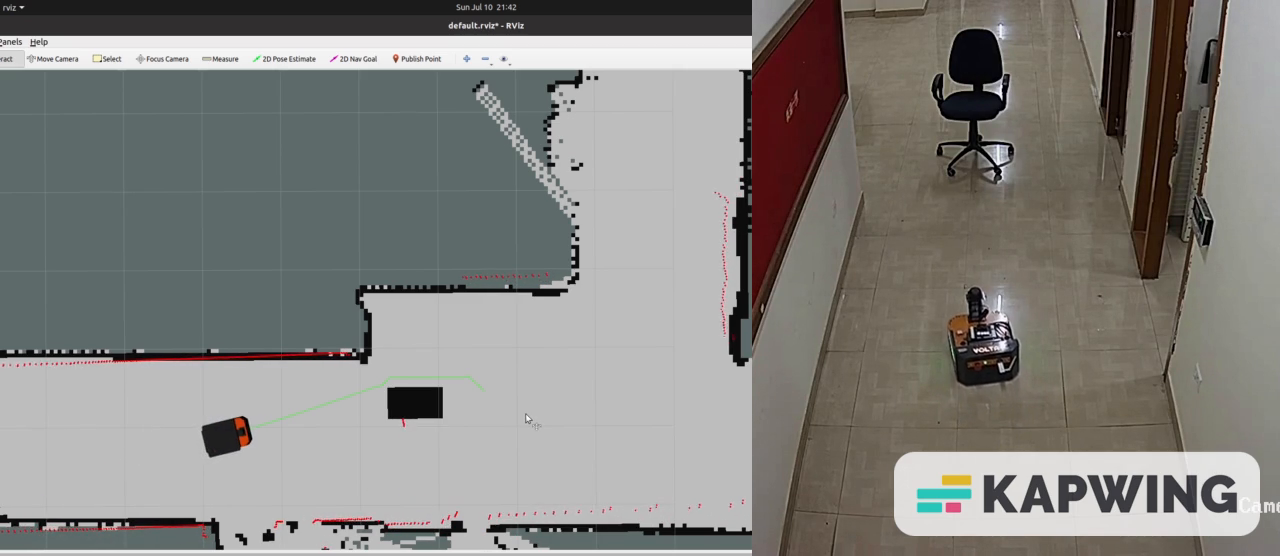

Ground robots are commonly used in warehouses and factories, where the environment is not static and keeps changing. Hence, we want a system that can dynamically update the global costmap whenever the environment changes.

For this purpose, we are creating an Object Detection and Map Update pipeline.

We use 3D object detection instead of standard 2D object detection because accurately updating the costmap requires both the object's position and an estimate of its real-world dimensions. 2D object detection cannot provide this, since it only returns a 2D bounding box in the image. 3D object detection returns a 3D bounding box in the camera's view frame, which carries both pieces of information.
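The map-update step can be sketched as follows. The sketch assumes the detected 3D box has already been transformed from the camera frame into the map frame (for example with tf) and reduced to its ground footprint: a center `(cx, cy)`, a length, a width and a yaw angle. `mark_box_footprint` is a hypothetical helper. It marks every grid cell whose center falls inside that rotated rectangle as occupied, using an `OccupancyGrid`-style resolution and origin.

```python
import math
from typing import List

OCCUPIED = 100  # ROS OccupancyGrid value for an occupied cell


def mark_box_footprint(grid: List[List[int]], resolution: float,
                       origin_x: float, origin_y: float,
                       cx: float, cy: float, length: float, width: float,
                       yaw: float) -> int:
    """Mark cells whose centers lie inside a rotated rectangle (the box footprint).

    grid is row-major (row = y, column = x), as in nav_msgs/OccupancyGrid.
    Returns the number of cells marked as occupied.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    half_l, half_w = length / 2.0, width / 2.0
    marked = 0
    for row in range(len(grid)):
        for col in range(len(grid[0])):
            # Map-frame coordinates of this cell's center.
            x = origin_x + (col + 0.5) * resolution
            y = origin_y + (row + 0.5) * resolution
            # Rotate the offset by -yaw to express it in the box's own frame.
            dx, dy = x - cx, y - cy
            u = dx * cos_y + dy * sin_y    # along the box length
            v = -dx * sin_y + dy * cos_y   # along the box width
            if abs(u) <= half_l and abs(v) <= half_w:
                grid[row][col] = OCCUPIED
                marked += 1
    return marked


if __name__ == "__main__":
    costmap = [[0] * 10 for _ in range(10)]  # 1 m x 1 m map at 0.1 m resolution
    n = mark_box_footprint(costmap, 0.1, 0.0, 0.0, cx=0.5, cy=0.5,
                           length=0.4, width=0.2, yaw=0.0)
    print(n)  # 8
```

For example, a 0.4 m by 0.2 m box centered at (0.5, 0.5) on a 0.1 m grid with origin (0, 0) marks 8 cells. A production costmap layer would only visit cells inside the footprint's bounding box. The full scan here simply keeps the sketch short.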

For this, we use MediaPipe's Objectron module, an open-source module that can detect objects such as chairs, shoes, coffee mugs and cameras.
